After about a year without writing, this is the first of three very verbose posts about shadow particles…

A year and a half ago Félix and I were talking about how we could implement a good shading effect for a particle system made in Flash. In those days people could render 100,000 particles with no problem using point lighting or color shading based on the position of the particle, but we wanted something more, and that is when we started to talk about shadows on particles.

Shadowing the particles was not an easy task (for Flash in those days), but we found very useful information about it on the web: GPU Gems has a very good article about rendering volume objects, and we also found a very handy paper about smoke particles written by Nvidia.

Both articles talk about “half angle rendering”. This kind of rendering uses the half angle between the view direction and the light direction to sort the particles and calculate their shadows. The bad news is that the particles must be sorted along that half angle direction.
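To make the sorting idea more concrete, here is a minimal JavaScript sketch of it. It is not code from those articles: the function names are made up for illustration, and it assumes that `toViewer` and `toLight` are directions pointing from the volume towards the camera and the light, less than 90 degrees apart (the articles also cover the case where the light is behind the volume, which this sketch ignores).

```javascript
// Returns the unit-length version of a 3D vector given as [x, y, z].
function normalize(v) {
  const len = Math.sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2]);
  return [v[0] / len, v[1] / len, v[2] / len];
}

// Dot product of two 3D vectors.
function dot(a, b) {
  return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Half vector between the direction to the viewer and the direction to the light.
function halfVector(toViewer, toLight) {
  const v = normalize(toViewer);
  const l = normalize(toLight);
  return normalize([v[0] + l[0], v[1] + l[1], v[2] + l[2]]);
}

// Returns a sorted copy of the positions: the particles that lie farthest
// along the half vector (closest to the viewer and the light) come first.
function sortAlongHalfVector(positions, toViewer, toLight) {
  const h = halfVector(toViewer, toLight);
  return positions.slice().sort((a, b) => dot(b, h) - dot(a, h));
}
```

Sorting like this on the CPU for every frame is exactly the cost we wanted to avoid.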

We tried to implement the algorithm in Flash but it was a total failure, so we parked the idea for a not so immediate future. Some time later we got news about WebGL and Stage3D (Molehill), and we got very excited about the idea of having hardware acceleration (and the possibility of implementing the shadows), even though we still love Flash (with its future acceleration).

Two weeks ago we finally got some time to play around with WebGL, so we started to learn its insides. We really recommend reading this page; it has many tutorials that actually helped us. We also took a look at the OpenGL ES 2.0 Specification to see which functions we could use for the shading.

Another good way to get in touch with WebGL is to read two “classes” of the Three.js framework: WebGLRenderer and WebGLShaders. The first one is very explicit about how to render objects with WebGL, but the second one is a must read: it wraps all the shaders that Three.js has, so you can explore them to get an idea of how to program your own.

Shadow Particles, “our” approach:

Shadowing particles is quite easy in OpenGL if you have all the extensions that it offers on the desktop (the full OpenGL version), but on the web (OpenGL ES 2.0) there are some issues that have to be solved to make it work. These issues are:

– There is no 3D texture in WebGL.

– There are no multiple render targets: gl_FragData is limited to a single output in the fragment shader.

– Sorting particles on the web has to be done in JavaScript or, in the best case, on the graphics card.

Without 3D textures we can't save the shadow information in layers as we first wanted. On the other hand, since we can't use gl_FragData to write several outputs from the fragment shader, we must render each kind of information in a separate pass. Finally, we decided that sorting in JS is not an option, and sorting on the graphics card is a task we didn't even consider (too hard for us ☹).

So in the end we decided to use the following algorithm:

– All the particles would be “part” of one “object”. By this we mean that we would make only one call to the graphics card to paint all the particles, providing one array buffer with one entry per particle holding its initial position. This means that all the animations would be made in the vertex shader.

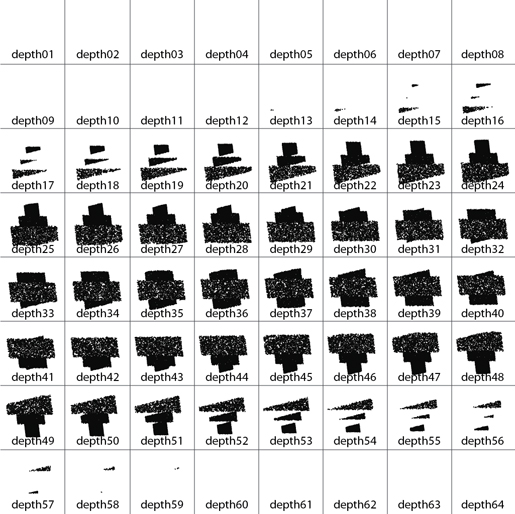

– Render the shadow information in a layered 2D texture: we would use an 8×8 layered texture in order to have 64 shadow layers. The main idea behind it is that each layer represents the shadow that affects the next layer. It is as if you sliced the volume in parts and rendered the shadow that the first part casts on the second, the second on the third, and so on.

– Use render to texture from a framebuffer to define the 2D shadow texture: since OpenGL ES gives us the possibility of using framebuffers, we thought that we should render the information into one buffer in order to get the texture. In the next pass we could use the texture in the shaders to define the colors of the points.

– Use a second pass to define the final color of the particles and paint them to the window framebuffer.

So, as you have read, we would use two passes, each rendering all the particles at once: the first pass would get the shadow information and the final pass would render the particles with the “correct” color.
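To picture how the 8×8 layered texture stands in for a 3D texture, here is a small JavaScript sketch that maps a layer index plus a local coordinate inside that layer to a coordinate in the whole texture. The function name and the convention that layer 0 sits in the top left tile are assumptions made for this illustration (the top left convention matches the placement function shown later in the post).

```javascript
// Maps a layer index (0..63) and a local coordinate inside that layer
// (localU, localV in [0, 1]) to a texture coordinate in the 8x8 atlas.
// Assumption: layers are laid out left to right, top to bottom, and
// v = 1 is the top of the texture.
function layerToAtlasUV(layer, localU, localV, sqrtSteps = 8) {
  const col = layer % sqrtSteps;
  const row = Math.floor(layer / sqrtSteps);
  return {
    u: (col + localU) / sqrtSteps,
    v: 1 - (row + 1 - localV) / sqrtSteps,
  };
}
```

For example, the center of layer 9 (second column, second row) lands at u = 0.1875, v = 0.8125.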

Rendering the shadow map:

The shadow map that we wanted to obtain is something like the following picture. It has 64 “buckets” for 64 different depths, defined by transforming the vertex positions to the light coordinate system. Once the transformation is done, the 64 layers must lie between the minimum and the maximum z of the volume containing all the particles.

The bad news is that you must have a bounding box to define zMin and zMax, and it gets worse when you animate your geometry. To solve this, instead of a bounding box we decided to use a bounding sphere that gives us one single measure (the radius) that contains all the geometry. This is how the “shadowBoundingRatio” was born.

The “shadowBoundingRatio” is the radius of that sphere, defined by the user (the programmer) based on the geometry that is going to be rendered (if you are going to animate the geometry there are some hacks that can be done to properly change this variable).

Now that we have a way to define the 64 depth steps, we only have to use the vertex shader to render each particle in its bucket. The bucket is defined using these equations:

desface.z = floor(steps * (gl_Position.z - uShadowRatio + uBoundingRadio) / (2.0 * uBoundingRadio));

desface.x = mod(desface.z, sqrtSteps);

desface.y = floor(desface.z / sqrtSteps);

The first line of code defines the actual depth step of the particle based on the shadowBoundingRatio (uniform uBoundingRadio in the vertex shader) and the uShadowRatio (we explain it below). The second and third lines define the x and y bucket position, from 0 to 7, in the 64 bucket texture to properly render the particle.
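If you want to check the numbers outside the shader, the three equations can be mirrored on the CPU. This is only a JavaScript sketch with made up names; the clamp to the valid layer range is an addition of this sketch (the shader code above does not clamp), added so that a particle sitting exactly on the far side of the sphere does not fall into a 65th bucket.

```javascript
// CPU mirror of the three "desface" equations.
// z: depth of the particle after the light transform and projection
// shadowRatio: same role as the uShadowRatio uniform
// boundingRadius: same role as the uBoundingRadio uniform
function depthBucket(z, shadowRatio, boundingRadius, steps = 64) {
  const sqrtSteps = Math.sqrt(steps);
  let layer = Math.floor(
    (steps * (z - shadowRatio + boundingRadius)) / (2 * boundingRadius)
  );
  // Assumption of this sketch: keep the result inside [0, steps - 1].
  layer = Math.min(steps - 1, Math.max(0, layer));
  return {
    layer,
    col: layer % sqrtSteps,
    row: Math.floor(layer / sqrtSteps),
  };
}
```

With shadowRatio = 10 and boundingRadius = 4, a particle at z = 10.5 gives 64 * 4.5 / 8 = 36, which is column 4 of row 4.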

The uniform variable uShadowRatio is the equivalent of the distance from the camera to its target. It allows us to fit the rendered slice to its position in the texture (think of it as the only way to see all the information without mixing it into other buckets or rendering it too small).

Once the depth step and the actual bucket are defined, we only have to place the particle in the framebuffer by altering its gl_Position with the previous information. Getting the actual bucket and defining the gl_Position is done with this function in the vertex shader:

void getDepthSlice() {

eyeVector = uLightTransformMatrix * eyeVector;

gl_Position = uPMatrix * eyeVector;

desface.z = floor(steps * (gl_Position.z - uShadowRatio + uBoundingRadio) / (2.0 * uBoundingRadio));

desface.x = mod(desface.z, sqrtSteps);

desface.y = floor(desface.z / sqrtSteps);

gl_Position.x -= gl_Position.w;

gl_Position.x += gl_Position.w / sqrtSteps;

gl_Position.x += 2.0 * gl_Position.w * desface.x / sqrtSteps;

gl_Position.y += gl_Position.w;

gl_Position.y -= gl_Position.w / sqrt(steps);

gl_Position.y -= 2.0 * gl_Position.w * desface.y / sqrtSteps;

}

Moving each vertex requires knowing that each position is normalized: after dividing by “w”, each coordinate lies in the [-1, 1] range. The final position on screen is defined by these equations:

X = 0.5 * (gl_Position.x / gl_Position.w + 1.0) * ViewportSize

Y = 0.5 * (gl_Position.y / gl_Position.w + 1.0) * ViewportSize

So every move has to take into account the “w” factor of the position; that is why we move the particles relative to “w” in the previous function. Further reading on this is highly recommended here.
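A quick way to convince yourself that the “w” relative moves are right is to repeat the same arithmetic in JavaScript and feed the result to the two viewport equations. The sketch below uses made up function names and assumes a square 512 pixel target in the example; it copies the offsets of the shader function line by line.

```javascript
// Applies the same offsets as the vertex shader function to a clip space
// position { x, y, z, w }, for the bucket at (col, row).
function placeInBucket(clip, col, row, sqrtSteps = 8) {
  let { x, y } = clip;
  const w = clip.w;
  x -= w;                        // go to the left edge
  x += w / sqrtSteps;            // center of the first column
  x += (2 * w * col) / sqrtSteps; // jump to the requested column
  y += w;                        // go to the top edge
  y -= w / sqrtSteps;            // center of the first row
  y -= (2 * w * row) / sqrtSteps; // jump down to the requested row
  return { x, y, z: clip.z, w };
}

// The two viewport equations from the post, for a square viewport.
function toPixels(clip, viewportSize) {
  return {
    X: 0.5 * (clip.x / clip.w + 1) * viewportSize,
    Y: 0.5 * (clip.y / clip.w + 1) * viewportSize,
  };
}
```

A particle at the center of the light view (x = 0, y = 0) placed in bucket (0, 0) ends at pixel (32, 480) of a 512 pixel texture, which is the center of the top left 64 pixel tile, and it ends at the same pixel whether w is 1 or 2.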

With the previous function in the vertex shader we obtain one framebuffer that we can render to a texture for the next render pass. You can see an example of it by clicking on the next image. This example is the shadow map for the main example, so you can see how the shadow changes with the light's movement.

The last example shows a white map with black particles, but in the actual framebuffer we use a vec4(0.0, 0.0, 0.0, 0.0) RGBA background color, and the particles are painted with a 1.0 / 64.0 * vec4(1.0, 1.0, 1.0, 0.0) particle color. We do this so that a particle receives no light at all only when all 64 layers cast shadow on it; the fewer layers affect the particle position, the lighter its shadow will be.

Once the particles are drawn in the framebuffer we only have to pass the final texture to the next pass. A good example of how to do this appears in this tutorial from LearningWebGL.
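For reference, this is roughly what the render to texture setup looks like with the standard WebGL 1 calls. It is a minimal sketch, not the code of our example: the function name, the NEAREST filtering and the RGBA / UNSIGNED_BYTE format are choices made for this illustration.

```javascript
// Creates a square RGBA texture and a framebuffer that renders into it.
// gl: a WebGLRenderingContext, size: side of the texture in pixels.
function createShadowTarget(gl, size) {
  const texture = gl.createTexture();
  gl.bindTexture(gl.TEXTURE_2D, texture);
  // Allocate the storage without uploading any pixels (null data).
  gl.texImage2D(gl.TEXTURE_2D, 0, gl.RGBA, size, size, 0,
                gl.RGBA, gl.UNSIGNED_BYTE, null);
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.NEAREST);
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MAG_FILTER, gl.NEAREST);
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_S, gl.CLAMP_TO_EDGE);
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_T, gl.CLAMP_TO_EDGE);

  const framebuffer = gl.createFramebuffer();
  gl.bindFramebuffer(gl.FRAMEBUFFER, framebuffer);
  gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0,
                          gl.TEXTURE_2D, texture, 0);
  const complete =
    gl.checkFramebufferStatus(gl.FRAMEBUFFER) === gl.FRAMEBUFFER_COMPLETE;

  // Unbind so that later draws go to the window framebuffer again.
  gl.bindFramebuffer(gl.FRAMEBUFFER, null);
  gl.bindTexture(gl.TEXTURE_2D, null);

  if (!complete) throw new Error("shadow framebuffer is incomplete");
  return { texture, framebuffer, size };
}
```

In the first pass you would bind `framebuffer` before drawing the particles, and in the second pass bind `texture` as a sampler input.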

Rendering the second pass:

The second pass is used to simulate the light interaction with each particle. Since particles do not have a normal, not much can be done in terms of real light simulation, but one thing we can do is define a light attenuation based on the distance from the particle to the light source. If the distance is measured to the light position, we get a point light source; if it is measured to the plane defined by the light vector (light.target - light.position) and the light position, we get a directional light.

Since the shadows in the layers are rendered parallel to each layer, the final shadows correspond to a directional light source, but in our approximation we mix point light attenuation with directional light shadows (because the point to point distance is easier to calculate than the plane to point distance). If you want to be rigorous you can change the attenuation distance calculation to that of a directional light.

By definition the final attenuation follows the square of the distance obtained (via directional or point light), but any power of the normalized (fractional) distance can be used to obtain a desired effect (linear, square or cubic attenuation).
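The two distances and the power based falloff can be sketched in JavaScript like this. The names and the exact falloff formula, (1 - d / range) raised to a power, are assumptions made for this illustration and not the formula of our shader; `range` is a hypothetical distance at which the light is considered fully attenuated.

```javascript
// Distance from the particle to the light position (point light).
function pointDistance(p, lightPos) {
  const dx = p[0] - lightPos[0];
  const dy = p[1] - lightPos[1];
  const dz = p[2] - lightPos[2];
  return Math.sqrt(dx * dx + dy * dy + dz * dz);
}

// Distance from the particle to the plane that passes through the light
// position and is perpendicular to (lightTarget - lightPos) (directional light).
function planeDistance(p, lightPos, lightTarget) {
  const d = [
    lightTarget[0] - lightPos[0],
    lightTarget[1] - lightPos[1],
    lightTarget[2] - lightPos[2],
  ];
  const len = Math.sqrt(d[0] * d[0] + d[1] * d[1] + d[2] * d[2]);
  return Math.abs(
    ((p[0] - lightPos[0]) * d[0] +
      (p[1] - lightPos[1]) * d[1] +
      (p[2] - lightPos[2]) * d[2]) / len
  );
}

// Hypothetical falloff: 1 at the light, 0 at `range`, shaped by `power`
// (1 = linear, 2 = square, 3 = cubic).
function attenuation(distance, range, power = 2) {
  const fraction = Math.max(0, 1 - distance / range);
  return Math.pow(fraction, power);
}
```

For a light at the origin looking down the negative z axis, a particle at (3, 0, -4) is 5 units away as a point light but only 4 units away from the light plane.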

To change the shadow darkness you can change the divisor of the vec4 color used in the vertex shader (the 1.0 / 64.0). If the 64.0 divisor is lower you get darker shadows; higher values make the shadow softer.
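A tiny worked example of how the divisor changes the result, as a JavaScript sketch. It assumes that the shadow on a particle is just the sum of one 1 / divisor contribution per layer that shadows it, capped at full shadow; the function name is made up for the illustration.

```javascript
// Fraction of light that reaches a particle when `shadowingLayers` layers
// cast shadow on it and each one contributes 1 / divisor.
function litFraction(shadowingLayers, divisor = 64) {
  const shadow = Math.min(1, shadowingLayers / divisor);
  return 1 - shadow;
}
```

With the default divisor, 16 shadowing layers leave 75% of the light; lowering the divisor to 32 leaves only 50% for the same 16 layers, so the shadow is darker.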

If you think the attenuation is right and the shadows are fine, but the whole thing is too dark, you can always add an ambient light (a fractional color added to all the particles) after you apply the shadow and attenuation.

Not everything is perfect….

The second pass has a little issue that we will address in a future post: each particle receives a shadow contribution from all the previous depth layers, so you have to read the shadow information from all the previous layers for each particle and then sum it to get the shadow intensity for that particle.

This means that “for” loops must be used in the vertex shader. For the first depth there are no contributions from previous depths, but for depth n there are contributions from (n-1) depths, and this makes the shading quite slow compared to using only the attenuation. How slow? If we sum (n-1) from n = 1 to n = 64 we get 2016 texture reads (it is not THAT bad); divided by the total number of depths (64), that is about 32 texture reads per depth on average (this slows down the performance a lot).
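The count is easy to verify with a few lines of JavaScript. This is only a check of the arithmetic, with a made up function name: depth n reads the (n - 1) layers in front of it.

```javascript
// Counts the texture reads needed when depth n (1-based) reads all the
// (n - 1) previous layers, for n = 1..steps.
function textureReads(steps = 64) {
  let total = 0;
  for (let n = 1; n <= steps; n++) {
    total += n - 1;
  }
  return { total, averagePerLayer: total / steps };
}
```

For 64 steps this gives 2016 reads in total and 31.5 reads per depth on average, which is the roughly 32 reads per depth mentioned above.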

There is a workaround for this problem, and it is as simple as applying 64 more passes to the framebuffer to sum the individual buckets: the first bucket would have no information, the second would be the sum of the first and the second, the third would be the sum of the first, the second and the third, and so on.

In each step of the composition we add the running sum from the previous step to the next bucket, so each bucket ends up holding all the information it needs. This means that we do not need “for” loops to read the texture and sum the components in the shader. Another benefit of this composition is that the final bucket contains all the shadow information that the volume produces on a surface, so you can project the shadow from the particles onto any object in your scene.
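The composition is a running sum over the buckets. Here is a JavaScript sketch of the idea on plain numbers, one value per bucket; on the GPU each step would be one extra pass over the framebuffer. The function name and the `exclusive` option are assumptions of this sketch: the inclusive form includes the bucket itself, the exclusive form only the buckets before it.

```javascript
// Running sum over the bucket values.
// exclusive = false: result[i] = values[0] + ... + values[i]
// exclusive = true:  result[i] = values[0] + ... + values[i - 1]
function accumulateBuckets(values, exclusive = false) {
  const result = new Array(values.length);
  let running = 0;
  for (let i = 0; i < values.length; i++) {
    if (exclusive) {
      result[i] = running;
      running += values[i];
    } else {
      running += values[i];
      result[i] = running;
    }
  }
  return result;
}
```

After this step a particle in bucket i needs a single read, and the last entry of the inclusive sum holds the shadow of the whole volume.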

This optimization also allows us to redefine the second rendering pass in order to recreate blending among the particles: we can render each depth with its final color, like we did in the shadow texture, and then we would have the particles sorted in layers. Finally, we would only have to add up each part of the framebuffer color texture to get proper blending for transparent particles.

There is another hack for the “for” issue, and it's actually what we are doing in this example: “assume” that the strongest shadows for one layer come from the layers closest to it, so you don't have to sum all the previous layers for one depth, only a limited number of them (we are using only ten layers). The drawback of this hack is that it produces many artifacts (but it's a little faster).
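The limited sum can be sketched in JavaScript on plain numbers as follows. The function name and the array of per layer shadow values are made up for the illustration; in the shader each entry would be one texture read.

```javascript
// Sums only the `window` layers right before layer `n`, instead of all
// the previous ones. `layers[i]` is the shadow value read from layer i.
function windowedShadow(layers, n, window = 10) {
  const first = Math.max(0, n - window);
  let sum = 0;
  for (let i = first; i < n; i++) {
    sum += layers[i];
  }
  return sum;
}
```

With every layer contributing the same amount, a particle in layer 40 gets 10 contributions instead of 40, which is where both the speed up and the artifacts come from.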

In the next posts we will make the improvements we have talked about: the second post will cover the composition in the framebuffer (for the shadow texture and the second pass of the color texture), and the third post will cover how to use this algorithm to animate particles (taking into account the limitations of the bounding sphere radius), and how to render the particles' shadow onto a surface.

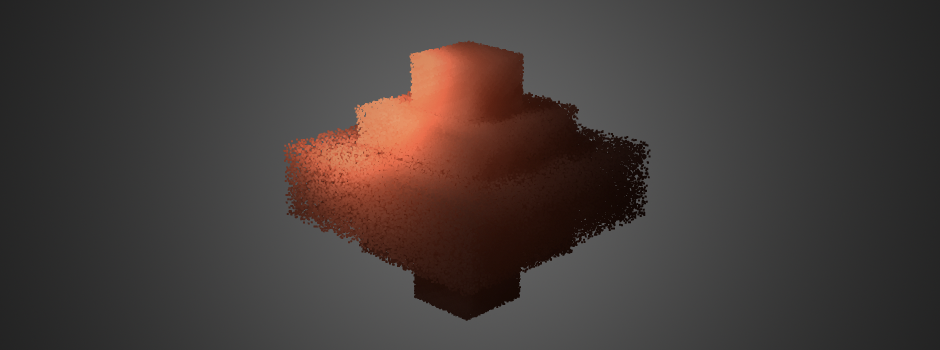

For now we have a little example that shows everything we have discussed in this post: one simple volume where you can press and drag on the scene to move the particles, and turn the light attenuation and shadows on and off to see their effect on the shading. You can see it by clicking on the image at the beginning of the post.