For one of my latest projects I worked with Drew and Maxime on a virtual try-on for testing headphone sets in AR. The app was based on the Beyond Reality Face Tracking library (BRFv5), which performs real-time face tracking from a video feed. The library offers a complete solution called ARTO that gives very nice results in terms of 3D positioning and compositing elements with the video from a webcam.

The idea is to use an occlusion mesh that hides the parts of the displayed element that the head would cover, which integrates the element really nicely with the real face from the feed. BRFv5 provides many examples with 3D options for reaching the same level of integration shown in its demos, but the library only takes care of the face recognition and of the 3D position, rotation and scale of the occlusion mesh and the model to display.

This means that the library doesn’t give any information about the lighting of the scene. That can produce awkward results, since the virtual lighting should match the real lighting in the video feed for the 3D elements to integrate properly with the displayed face. To solve this, we decided that the project needed a way to estimate the lighting for the 3D model so that it looks more realistic.

The models should also display shadows cast by the face itself, which requires defining a light source that casts them. The intensity of the shadows should be modulated by the background light, to avoid displaying shadows that are too dark when the background is well lit.

Algorithm

One thing that can be observed when working with virtual try-ons is that the face tends to take up a big part of the view in the video feed. This means that each frame can be treated as two planes: one containing the face information (near plane) and a second one containing the background information (far plane).

The far plane can be used to define the amount of digital ambient light. This is done by evaluating the pixels of the image that are not part of the recognized face, using the face occlusion mesh as a mask to remove the face pixels from the video feed. The remaining fragments are transformed from RGB to HSL, and the median of their lightness values gives a naive estimate of the light intensity of the background.
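The far plane evaluation can be illustrated on the CPU. The snippet below is only a sketch under assumptions: the function names, the RGBA byte layout and the `faceMask` array (1 where the occlusion mesh covers a pixel) are hypothetical, and the real project does this work on the GPU.

```javascript
// HSL lightness of an 8-bit RGB triple, returned in [0, 1].
function hslLightness(r, g, b) {
  return (Math.max(r, g, b) + Math.min(r, g, b)) / (2 * 255);
}

// rgba: typed array of RGBA bytes, one pixel every 4 entries (assumed layout).
// faceMask: one entry per pixel, truthy where the face mesh covers it.
function backgroundLightness(rgba, faceMask) {
  const values = [];
  for (let i = 0; i < faceMask.length; i++) {
    if (faceMask[i]) continue; // skip face pixels, keep background only
    values.push(hslLightness(rgba[4 * i], rgba[4 * i + 1], rgba[4 * i + 2]));
  }
  if (values.length === 0) return 0; // assumption: no background visible
  values.sort((a, b) => a - b);
  const mid = values.length >> 1;
  // Median: middle value, or mean of the two middle values.
  return values.length % 2 ? values[mid] : (values[mid - 1] + values[mid]) / 2;
}
```

The returned value is already in the [0 – 1] range, so it could be used directly as the ambient light intensity.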

The fragments from the near plane can be mapped to the normals rendered in a 2D framebuffer, so that each normal is related to the lightness of the corresponding pixel. Each fragment is evaluated against a threshold, and the ones below it are discarded. This process isolates the regions of the face that receive more direct lighting. Finally, another reduction process obtains the median normal of that region, which defines the direction the light comes from.
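As a rough CPU illustration of the near plane step, the sketch below keeps the face fragments whose lightness reaches a threshold and combines their normals into one direction. It is written under assumptions: the function name and array layouts are hypothetical, a normalized average stands in for the median described above, and the +Z fallback assumes that "in front of the face" points along +Z.

```javascript
// normals: xyz triples, one per face fragment (assumed layout).
// lightness: one value in [0, 1] per face fragment.
function estimateLightDirection(normals, lightness, threshold) {
  let x = 0, y = 0, z = 0;
  for (let i = 0; i < lightness.length; i++) {
    if (lightness[i] < threshold) continue; // fragments below the threshold are discarded
    x += normals[3 * i];
    y += normals[3 * i + 1];
    z += normals[3 * i + 2];
  }
  const len = Math.hypot(x, y, z);
  // No fragment passed (or the normals cancel out): place the light in front of the face.
  if (len < 1e-6) return [0, 0, 1];
  return [x / len, y / len, z / len];
}
```

For example, with three fragments whose normals are `[1,0,0]`, `[0,0,1]` and `[-1,0,0]` and lightness `0.9`, `0.2` and `0.1`, a threshold of `0.5` keeps only the first fragment, so the light is estimated to come from the +X side.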

GPU steps

To implement the previous algorithm, several GPU draw calls are used. They are described below:

– Render the 3D face occlusion mesh with the position, rotation and scale obtained from the tracking library, and generate two textures: one containing the fragments that belong to the background and another containing the fragments that belong to the face. These fragments are converted from RGB to HSL. The face texture encodes the normals of the 3D face together with the lightness of the video feed for the corresponding face fragment.

– Run a reduction process on the background texture to evaluate the median lightness. This is a series of passes, much like mipmapping. Actual mipmapping is not used because the reduction has to discard the fragments that don’t contain background information. The ambient light intensity is defined in the range [0 – 1] and corresponds to the median obtained in the final reduction.

– Run the same reduction process on the face texture to obtain the median lightness of the face’s fragments. This median value, in the range [0 – 1], can be used to modulate the intensity of the point light that acts as the main light source and generates the shadows. Only the fragments with normal and lightness information should be taken into account.

– Run a third reduction process on the face texture, this time using only the fragments that are above a certain threshold, which define the regions described in the algorithm. The idea is to use the reduction to calculate the median normal direction of the most strongly lit region(s). The light source position can then be defined by using the head as the origin of coordinates and displacing the light source along the normal found by an arbitrary distance. The distance can be tuned by trial and error, using the intensity defined in the previous face reduction and comparing the resulting shadows with real light examples.
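The reduction passes in the steps above can be mimicked on the CPU to show why plain mipmapping is not enough: every texel carries a weight, and texels without valid information (weight 0) must not pull the result down. The sketch below is an illustration under assumptions, not the shaders used in the project: the names are hypothetical, the texture is a square power-of-two array of `[value, weight]` pairs, floats are used for clarity, and a weighted average stands in for the median.

```javascript
// One pass: halves a size x size texture of [value, weight] pairs.
function reducePass(src, size) {
  const half = size / 2;
  const dst = new Float32Array(half * half * 2);
  for (let y = 0; y < half; y++) {
    for (let x = 0; x < half; x++) {
      let sum = 0, weight = 0;
      // Gather the 2x2 block of source texels that maps to this output texel.
      for (let dy = 0; dy < 2; dy++) {
        for (let dx = 0; dx < 2; dx++) {
          const i = ((2 * y + dy) * size + (2 * x + dx)) * 2;
          sum += src[i] * src[i + 1];
          weight += src[i + 1];
        }
      }
      const o = (y * half + x) * 2;
      dst[o] = weight > 0 ? sum / weight : 0; // invalid texels are ignored
      dst[o + 1] = weight;                    // number of valid texels so far
    }
  }
  return dst;
}

// Repeats the pass until a single texel remains.
function reduceToSingleValue(src, size) {
  let current = src;
  for (let s = size; s > 1; s /= 2) current = reducePass(current, s);
  return { value: current[0], weight: current[1] };
}
```

Stopping the loop one pass earlier leaves a 2×2 texture, that is, one reduced value per quadrant of the image.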

With this simple process the displayed 3D elements can be rendered with a main light source whose position and intensity are defined by the face information, plus an ambient light, obtained from the background information, that modulates the fill light and the darkness of the shadows.
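Putting the three reduction results together could look like the sketch below. Everything here is hypothetical naming, and the mapping `shadowDarkness = 1 - backgroundLightness` is only an assumption that matches the stated goal (brighter background, lighter shadows); the original project may use a different curve.

```javascript
// headPosition, lightDirection: [x, y, z]; lightness values in [0, 1].
// distance: arbitrary offset of the light from the head, tuned by trial and error.
function buildLightRig(headPosition, lightDirection, faceLightness, backgroundLightness, distance) {
  const len = Math.hypot(lightDirection[0], lightDirection[1], lightDirection[2]) || 1;
  // Displace the light from the head along the normalized direction.
  const position = headPosition.map((c, i) => c + (lightDirection[i] / len) * distance);
  return {
    mainLight: { position, intensity: faceLightness },
    ambientIntensity: backgroundLightness,
    shadowDarkness: 1 - backgroundLightness, // assumed mapping, see note above
  };
}
```

The resulting object is renderer-agnostic; its fields would be copied into whatever light and shadow parameters the 3D engine exposes.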

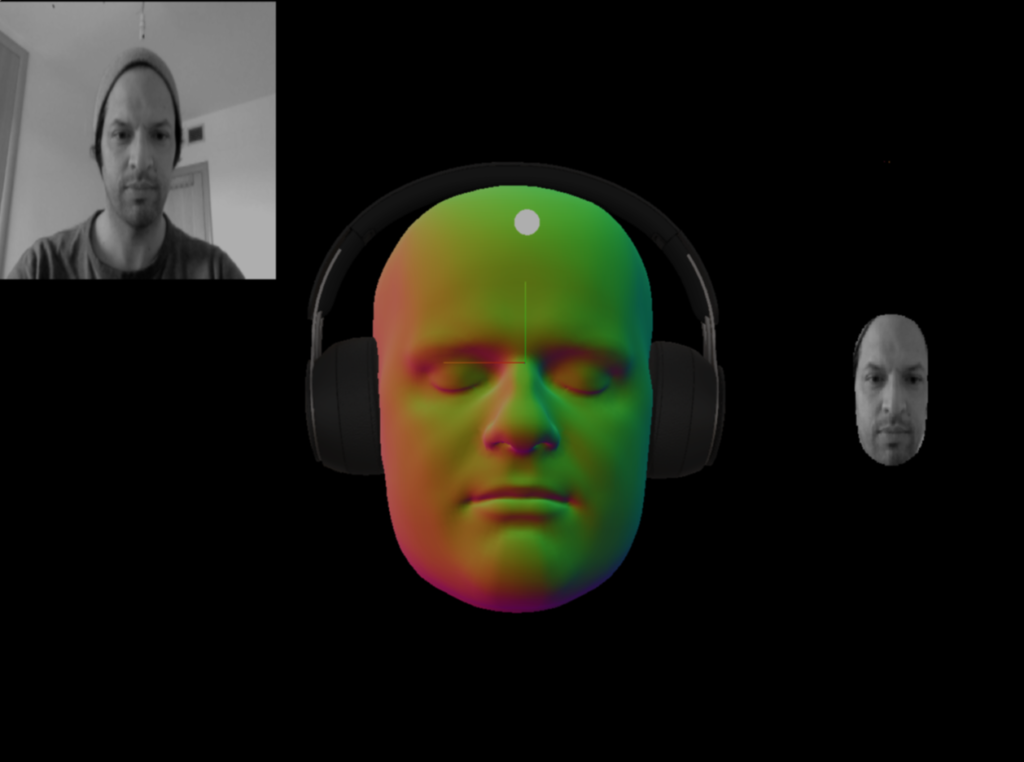

In the previous debug view you can see how the image is transformed from RGB to HSL and how the 3D mesh then isolates the section of the image where the face is located. Since there are no fragments above the threshold, the main light source is placed in front of the face.

Once some fragments are above the threshold, the corresponding region is isolated and the median normal is obtained from that region. In the original image in the top left corner you can see where the light is coming from.

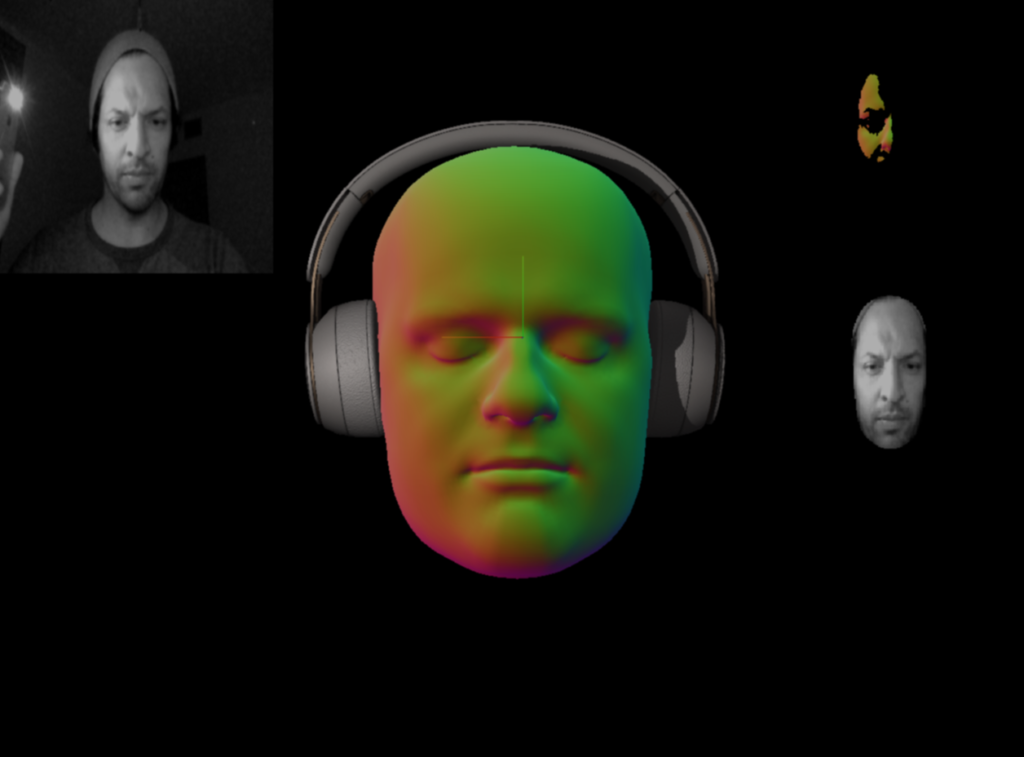

The intensity of the shadow is defined by the background, which is not displayed in the debug view. The top left image does show that the face only receives the light coming from the phone and no indirect lighting, which makes the shadows from the headphones darker since there’s no ambient light.

Caveats

This approach is a naive implementation that gives nice results in quite a variety of scenarios, but it has some caveats. First of all, the fragments of the face are compared against a threshold whose value is set arbitrarily; there should be a more rigorous way to decide which fragments are accepted and which are discarded. The algorithm also evaluates the median of a region, assuming that the whole lighting can be simplified to a single main light source.

Since the reduction is made in several drawing steps, the algorithm could instead obtain the median normal of different regions. The idea would be to read the values from the second-to-last level of the reduction process, a texture of 2×2 fragments, which defines four normals with different directions. Four light sources could then be placed using the normals from this texture, each with one quarter of the intensity of the single main light. This would generate shadows that can face opposite directions, something that can’t be replicated with a single light source.
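A minimal sketch of that four-light idea follows, assuming the 2×2 level has already been read back as four xyz normals. The function name and layout are hypothetical, and skipping quadrants with a zero normal (no lit fragments) is an assumption of this sketch, not something the original describes.

```javascript
// normals2x2: 12 numbers, one xyz normal per quadrant (assumed layout).
function lightsFromQuadrants(normals2x2, headPosition, distance, mainIntensity) {
  const lights = [];
  for (let q = 0; q < 4; q++) {
    const n = [normals2x2[3 * q], normals2x2[3 * q + 1], normals2x2[3 * q + 2]];
    const len = Math.hypot(n[0], n[1], n[2]);
    if (len < 1e-6) continue; // assumption: an empty quadrant contributes no light
    lights.push({
      position: headPosition.map((c, i) => c + (n[i] / len) * distance),
      intensity: mainIntensity / 4, // one quarter of the single main light
    });
  }
  return lights;
}
```

With normals pointing to +X and -X in two quadrants, the two resulting lights sit on opposite sides of the head, which is what allows shadows facing opposite directions.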

The algorithm can’t define the light color, which is assumed to be full white. Instead, it uses a small section from the top right corner of the video feed to get color information from the background. This small sample is used to create a cube texture that serves as an environment map and modifies how the materials of the displayed element behave. It does assume that the whole background is similar to the gathered sample, which is quite effective for closed indoor spaces. The assumption works well because the face is much more prominent than the background in the video feed, so the 3D elements blend well with what is shown of the background, not with the actual real scene.
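Gathering that corner sample can be sketched as a plain copy from the frame's pixel buffer. This is a hedged illustration: the function name is hypothetical, and it assumes tightly packed RGBA bytes with row 0 at the top of the image (as in canvas `ImageData`; buffers read back from WebGL start at the bottom row instead).

```javascript
// Copies a size x size RGBA patch from the top right corner of the frame.
function sampleTopRightCorner(rgba, width, height, size) {
  const s = Math.min(size, width, height); // clamp to the frame dimensions
  const patch = new Uint8Array(s * s * 4);
  for (let y = 0; y < s; y++) {
    const srcStart = (y * width + (width - s)) * 4; // last s pixels of row y
    patch.set(rgba.subarray(srcStart, srcStart + s * 4), y * s * 4);
  }
  return patch;
}
```

One possible use is to upload the same patch to each of the six faces of a cube map with `gl.texImage2D`, which matches the assumption that the whole background looks like the sample.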

The algorithm can only find main light sources that are in front of the face. If a light source is behind the head, it is considered part of the background since it’s not lighting the face directly. This leads to some edge cases where the lights illuminate the cheeks or ears prominently. In these cases the virtual light position won’t reflect the expected type of light, since the median of the region won’t account for zones that are not present in the normals texture.

Results

The lighting results are really good in a variety of scenarios. A dark background with a single light source can be replicated really well, shadows adapt well to the lighting conditions of the background, and the light position doesn’t glitch over time, which keeps the results stable with moving lights. This approach can be easily implemented on mobile devices since it does not use float textures. The lightness information is encoded as a value in the range [0 – 1] using unsigned bytes, which are read in JavaScript with the readPixels command from WebGL. This provides up to 256 levels of intensity for the light, which is quite good for the requirements of the project.
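To illustrate the unsigned byte encoding, the sketch below packs a normal and a lightness value into one RGBA texel and decodes it again. The layout (normal in RGB remapped from [-1, 1] to [0, 255], lightness in alpha) and the function names are assumptions made for this example; the article only states that the values are stored as unsigned bytes.

```javascript
// Packs a unit normal and a lightness in [0, 1] into four bytes.
function encodeTexel(normal, lightness) {
  const toByte = (v) => Math.round(Math.min(1, Math.max(0, v)) * 255);
  return Uint8Array.of(
    toByte(normal[0] * 0.5 + 0.5),
    toByte(normal[1] * 0.5 + 0.5),
    toByte(normal[2] * 0.5 + 0.5),
    toByte(lightness)
  );
}

// Decodes four bytes, e.g. a 1x1 result filled by
// gl.readPixels(0, 0, 1, 1, gl.RGBA, gl.UNSIGNED_BYTE, bytes).
function decodeTexel(bytes) {
  const n = [0, 1, 2].map((i) => (bytes[i] / 255) * 2 - 1);
  const len = Math.hypot(n[0], n[1], n[2]) || 1;
  return {
    normal: n.map((v) => v / len), // renormalize to hide quantization error
    lightness: bytes[3] / 255,     // one of 256 possible levels
  };
}
```

The round trip is lossy, but the error stays within one byte step, which is consistent with the 256 levels mentioned above.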

The following images show the result under different lighting conditions in the same environment.