Before reading any further…

The author assumes that the reader understands the implementation of the marching cubes algorithm in the CPU, also has knowledge on how to perform a stream compaction over a texture using histopyramids. Please refer to http://paulbourke.net/geometry/polygonise/ and http://www.miaumiau.cat/2016/10/stream-compaction-in-webgl/ for more information about these subjects.

Marching Cubes Steps:

The marching cubes algorithm can be separated in two steps which are the active voxels allocation and the vertices/normals generation. The first step uses a iso level value that defines a surface from the potential, where the active voxels are the ones that are intersected by the corresponding generated surface. To check the intersections all the edges from each voxel are evaluated.

An intersection is obtained if the potential value from one of the corners of the edge is below than the iso level and the other corner’s potential value is above. The different intersections represent one of the 256 possible combinations defined in the marching cubes implementation. These combinations, expressed in tables, help to know the total amount of vertices to generate, and the edges where these vertices would be allocated.

The second step is responsible to generate the vertices positions and normals in the active voxels, this is done taking into account the quantity of vertices/triangles required for the case obtained in the previous step. This pass allocates the edge where the the vertex will be placed, and sets the vertex position with a linear interpolation between the two corners from the edge using the iso level as an interpolator.

To apply the previous two steps explained it’s important to translate the different tables in the implementation from Paul Bourke into two textures.

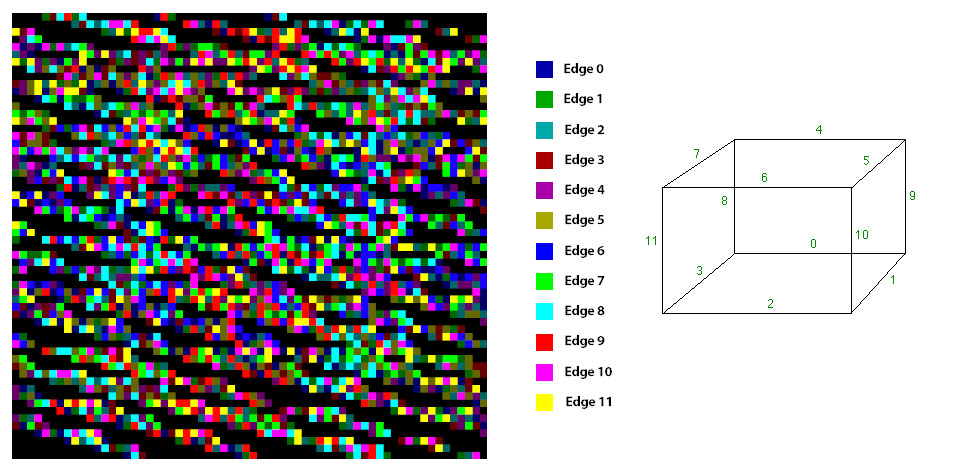

The first texture saves the 15 possible vertices to generate for each of the 256 combinations presented in the marching cubes, the data can be obtained from the “triTable” found here. This two dimensional data table is expanded in a one dimensional array containing 3840 values (notice that 16th value from each dimension is always -1, so this last one is always discarded), and then allocated inside a 64×64 2D floating point texture. Each fragment saves the corresponding edge where the vertex would be generated in the active voxel where values with -1 represent that no vertices need to be created for that edge. The resulting texture is shown below.

The previous texture is a color encoded visualization from the original floating point texture, each square represents a position where the color defines the edge where the vertex will be allocated. Notice that every 15 squares a new combination is defined, and for each combination the colored squares represent the vertices to generate, and the black ones the “-1” values meaning that there’s no more data needed for that combination.

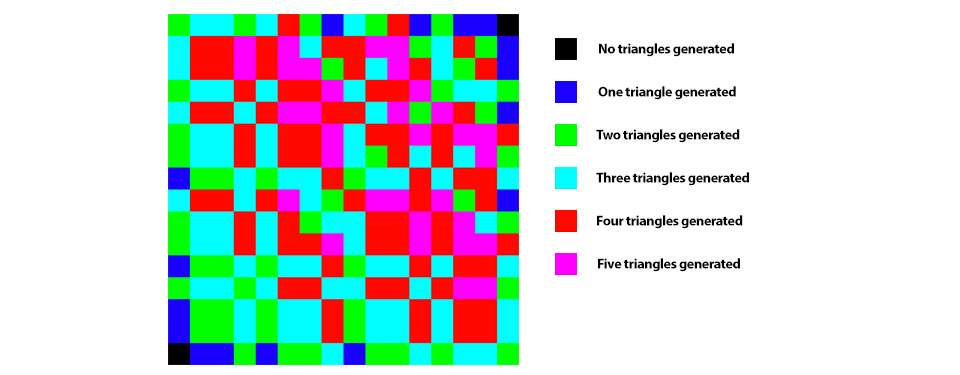

The second texture to create is also based from the “triTable” used before, but with a different interpretation, the idea is to save the amount to triangles to generate on each one of the cases, these 256 values are saved inside a 16×16 2D floating point texture where all the channels contain the same value; the amount of triangles to generate for each case can be obtained counting the values that are different from -1 and dividing the result by three. The following texture is shows the visual representation of the data.

Active Voxels Evaluation:

With the two previous textures it’s time to evaluate the active voxels, to do so the 3D expanded texture is used to check a condition where each corner of the voxels is tested to evaluate if the corresponding value is below the iso level required. The implementation from Paul Bourke uses bit masking to define which case is used based on a 12 bit number; in the current implementation, a floating point sum is used to represent the case that defines the triangles to generate since GLSL doesn’t allow bit manipulation. The process is done drawing a quad where the fragment shader evaluates all the voxels defined in the 2D texture to evaluate, doing so the whole 3D voxel space is evaluated at once. The following code executes the active voxels search.

precision mediump sampler2D;

//Texture container the potential values in the corners of the voxels.

uniform sampler2D uCornersTexture;

//Texture holding the numbers of triangles to generate on each of the 256 cases from the MC

uniform sampler2D uTrianglesIndex;

//Iso level used to define the surface required from the potential.

uniform float uRange;

varying vec2 vText;

//Constants used to simulate the 3D texture in a 2D texture

const vec4 C_3D = vec4(1. / 4096., 256., 16., 1. / 16.);

//Function used to evaluate the 2D index from a 3D position.

vec2 index2D(vec3 pos) {

}

void main(void) {

vec2 position = floor(vText / C_3D.x);

vec3 pos3D = vec3(mod(position.y, C_3D.y), C_3D.z * floor(position.y / C_3D.y) + floor(position.x / C_3D.y), mod(position.x, C_3D.y));

//The MC case to use in the voxel evaluated is calculated as the sum of corners that are below the iso level required.

float c = step(texture2D(uCornersTexture, index2D(pos3D)).r, uRange)

+ 2. * step( texture2D(uCornersTexture, index2D(pos3D + vec3(1., 0., 0.))).r, uRange)

+ 4. * step( texture2D(uCornersTexture, index2D(pos3D + vec3(1., 1., 0.))).r, uRange)

+ 8. * step( texture2D(uCornersTexture, index2D(pos3D + vec3(0., 1., 0.))).r, uRange)

+ 16. * step( texture2D(uCornersTexture, index2D(pos3D + vec3(0., 0., 1.))).r, uRange)

+ 32. * step( texture2D(uCornersTexture, index2D(pos3D + vec3(1., 0., 1.))).r, uRange)

+ 64. * step( texture2D(uCornersTexture, index2D(pos3D + vec3(1., 1., 1.))).r, uRange)

+ 128. * step( texture2D(uCornersTexture, index2D(pos3D + vec3(0., 1., 1.))).r, uRange);

c *= step(c, 254.);

//The total triangles to generate are obtained knowing which one of the 256 cases is required and reading the

//amount triangles from the 16×16 texture provided.

float totalTrianglesToGenerate = texture2D(uTrianglesIndex, vec2(mod(c, 16.), floor(c / 16.)) / 16.).r;

//The resulting fragment saves the amount of triangles to generate and the MC case obtained.

gl_FragColor = vec4(vec3(totalTrianglesToGenerate * 3.), c);

}

The previous shader works over all the fragments generated on the quad, where its UV position is used as a key to obtain the 3D position of the voxel simulated in the texture 2D, this is done using the data from the C_3D constants previously explained.

The sampler uTrianglesIndex is the second texture commented at the beginning of the post. Notice that the indexing is based on the actual marching cubes case obtained from the corresponding voxel/fragment. The variable “uCornersTexture” represents the values of each voxel’s corners potential saved in a texture3D, this is generated from the point cloud animation and it’s evaluated against a iso level defined with the uniform named uRange.

The shader starts finding the 3D position for each fragment, then it evaluates the corresponding corners and checks if the value is below the required iso level (uRange), if the test passes the case variable is modified to finally define which case from the 256 combinations is the one to implement, this is saved in the “c” variable. Also notice that since the case 0 and 255 represent the case where all the vertices are below or over the iso level no triangles will be generated, hence the C value is defined as zero.

Once the marching cubes case is defined the uTrianglesIndex sampler is used to obtain the total amount of vertices to generate for the corresponding voxel. Finally the output of the shader is generated encoding the amount of triangles in the RGB channel and the marching cubes case in the alpha channel. The output of this program is a texture3D which has the active voxels scattered on a 2D texture, this means that a stream compaction pass should be applied to the output of this shader to calculate the corresponding vertices only in the active cells. The result of this step can be seen in the following image.

The same shader can me modified to write a second debugging texture that shows the amount of triangles allocated per voxel, this would give a visual representation of how things are working in the first step. The idea is to use multiple render targets and rewrite the output the shader as follows:

gl_FragData[0] = vec4(vec3(totalTrianglesToGenerate * 3.), c);

//Color implementation to check the amount of triangles required inside each voxel

gl_FragData[1] = vec4(0.);

if(totalTrianglesToGenerate == 1.) gl_FragData[1] = vec4(0., 0., 1., 1.);

if(totalTrianglesToGenerate == 2.) gl_FragData[1] = vec4(0., 1., 0., 1.);

if(totalTrianglesToGenerate == 3.) gl_FragData[1] = vec4(1., 0., 0., 1.);

if(totalTrianglesToGenerate == 4.) gl_FragData[1] = vec4(0., 1., 1., 1.);

if(totalTrianglesToGenerate == 5.) gl_FragData[1] = vec4(1., 0., 1., 1.);

The second output represent a color visualization of the amount of triangle to generate, color convention is defined below:

– blue: one triangle.

– green: two triangles.

– red: three triangles.

– blue + green (aquamarine): four triangles.

– blue + red (purple): five triangles.

The image below shows the visual representation obtained from the active cells step, notice that most of the cells require two triangles per voxel (green color), meaning that the algorithm would be calculating a median of six vertices per active voxel.

Stream Compaction and vertices generation:

Once the active voxels are allocated and each case is defined for them, a stream compaction method should be applied to the resulting texture avoiding the generation of triangles over non active voxels. To do so the current implementation uses the expansion ability of the histopyramids allowing to allocate offsets of one particular value in the output stream during the compaction process.

The compaction process is partially based on this post. In the implementation explained in the previous article one initial base texture is generated from the data texture to compress. This base texture is based on a binary image where each position represent where the data is allocated. Inserting the binary texture in the reduction process the algorithm returns the amount of data (values) in the texture.

Instead of using a binary texture, the base texture would be the result from the active voxels step, where each “active “ fragment represents the amount of vertices required for each active voxel. Using this texture in the reduction process allows to know the total vertices required to generate.

Once the reduction process obtains the total amount of vertices, the compaction reorganize the texture from the active voxels step, generating contiguous offsets of the same voxel information as many times as vertices are defined for each voxel. The following image shows the difference between a compaction of a binary texture and the texture obtained from the active voxels step.

Since the compaction process also returns a unique key for each offset, the result represents the memory required to allocate the amount of vertices to generate, arranged by voxels and indexed by local voxels offsets. This means that the same shader can be used the generate the vertices and normals using the marching cubes algorithm.

The new compaction/expansion and vertices/normals shader is shown below.

precision highp float;

precision highp sampler2D;

//Pyramid texture containing all the reduction steps.

uniform sampler2D uPyramid;

//Active voxels texture.

uniform sampler2D uBase;

//Potential corners values.

uniform sampler2D uPot;

//triTable data from Paul Bourke 64×64 texture

uniform sampler2D uTrianglesIndexes;

//level 0 of the pyramid.

uniform sampler2D uTotal;

//Uniform used to defined normals quality

uniform bool uFastNormals;

//iso level used to define the surface from the potential

uniform float uRange;

varying vec2 vText;

const float EPSILON = 0.00001;

//Inverse of the size from the 3D texture 256^3

const float p = 1. / 256.;

//Corners for any given voxel.

const vec3 p0 = vec3(p, 0., 0.);

const vec3 p1 = vec3(p, p, 0.);

const vec3 p2 = vec3(0., p, 0.);

const vec3 p3 = vec3(0., 0., p);

const vec3 p4 = vec3(p, 0., p);

const vec3 p5 = vec3(p, p, p);

const vec3 p6 = vec3(0., p, p);

//Constants used to simulate the 3D texture

const vec4 C3D = vec4(4096., 256., 16., 1. / 16.);

//Calculate the 2D index to read the data in the 2D texture based in a 3D position

vec2 index2D(vec3 pos) {

}

void main(void) {

float vI = dot(floor(C3D.x * vText), vec2(1., C3D.x));

//If the fragment’s key is higher than the total of vertices needed to create the execution is halted.

if(vI >= texture2D(uTotal, vec2(0.5)).r) discard;

else {

*This is the compaction process, it’s explained in http://www.miaumiau.cat/2016/10/stream-compaction-in-webgl/

*/

float offset = C3D.x – 2.;

float k = 1. / C3D.x;

vec2 relativePosition = k * vec2(offset, 0.);

vec4 partialSums = texture2D(uPyramid, relativePosition);

float start = 0.;

vec4 starts = vec4(0.);

vec4 ends = vec4(0.);

float div0 = 1. / C3D.y;

float diff = 2.;

vec4 m = vec4(0.);

vec2 position = vec2(0.);

vec4 vI4 = vec4(vI);

//12 steps are required to parse the different levels of the pyramid.

for(int i = 1; i < 12; i++) {

diff *= 2.;

relativePosition = position + k * vec2(offset, 0.);

ends = partialSums.wzyx + vec4(start);

starts = vec4(start, ends.xyz);

m = vec4(greaterThanEqual(vI4, starts)) * vec4(lessThan(vI4, ends));

relativePosition += m.y * vec2(k, 0.) + m.z * vec2(0., k) + m.w * vec2(k, k);

start = dot(m, starts);

position = 2. * (relativePosition – k * vec2(offset, 0.));

partialSums = texture2D(uPyramid, relativePosition);

}

ends = partialSums.wzyx + vec4(start);

starts = vec4(start, ends.xyz);

m = vec4(greaterThanEqual(vI4, starts)) * vec4(lessThan(vI4, ends));

position += m.y * vec2(k, 0.) + m.z * vec2(0., k) + m.w * vec2(k, k);

* MARCHING CUBES TO GENERATE THE VERTICES

* POSITIONS AND NORMALS

*/

//This data contains the 2D position of the voxel reallocated, the index offset for the vertex to generate in the corresponding voxel

//and the MC case used for that voxel.

vec4 data = vec4(position * C3D.x, vI – dot(m, starts), texture2D(uBase, position).a);

//Up to 15 vertices can be generated per voxel, the current vertex to generate is saved in this variable

float currentVertex = data.b;

//Calculate the 3D position of the voxel based on the 2D position in the scattered data.

data.xyz = p * vec3(mod(data.y, C3D.y), C3D.z * floor(data.y * p) + floor(data.x * p), mod(data.x, C3D.y));

//Obtain the one dimensional index to read the corresponding edge to use.

float mcIndex = 15. * data.a + currentVertex;

//Obtain the edge to use from the voxel using the previous index and reading the data from the triTable texture.

vec4 mcData = texture2D(uTrianglesIndexes, vec2(mod(mcIndex, 64.), floor(mcIndex / 64.)) / 64.);

mcIndex = mcData.r;

//To obtain the two points that define the edge the original implementation uses a set of if conditionals.

//The shader makes a sum of all the corners using masks to discard the values that are not needed.

vec4 m0 = vec4(mcIndex);

//Check if values are in the edge

vec4 m1 = vec4(equal(m0, vec4(0., 1., 2., 3.)));

vec4 m2 = vec4(equal(m0, vec4(4., 5., 6., 7.)));

vec4 m3 = vec4(equal(m0, vec4(8., 9., 10., 11.)));

//The two points are the voxel position plus the point active using the mask calculated before.

vec3 b0 = data.rgb + m1.y * p0 + m1.z * p1 + m1.w * p2 + m2.x * p3 + m2.y * p4 + m2.z * p5 + m2.w * p6 + m3.y * p0 + m3.z * p1 + m3.w * p2;

vec3 b1 = data.rgb + m1.x * p0 + m1.y * p1 + m1.z * p2 + m2.x * p4 + m2.y * p5 + m2.z * p6 + m2.w * p3 + m3.x * p3 + m3.y * p4 + m3.z * p5 + m3.w * p6;

//Potential values in the corresponding corners

vec4 n0 = texture2D(uPot, index2D(C3D.y * b0));

vec4 n1 = texture2D(uPot, index2D(C3D.y * b1));

vec2 diff1 = vec2(uRange – n0.a, n1.a – n0.a);

//Value used to evaluate the linear interpolation between the two corners points to define the position of the vertex to generate.

vec3 mult = vec3(lessThan(abs(vec3(diff1.x, uRange – n1.a, -diff1.y)), vec3(0.)));

vec3 normalA = vec3(0.);

vec3 normalB = vec3(0.);

*Gradients evaluations using forward differences

*or applying a 3D Sobel kernel.

*/

if(uFastNormals) {

vec2 deltaY = index2D(C3D.y * (b0 + vec3(0., p, 0.)));

vec2 deltaZ = index2D(C3D.y * (b0 + vec3(0., 0., p)));

normalA = normalize(-vec3(n0.a – texture2D(uPot, deltaX).a, n0.a – texture2D(uPot, deltaY).a, n0.a – texture2D(uPot, deltaZ).a));

deltaX = index2D(C3D.y * (b1 + vec3(p, 0., 0.)));

deltaY = index2D(C3D.y * (b1 + vec3(0., p, 0.)));

deltaZ = index2D(C3D.y * (b1 + vec3(0., 0., p)));

normalB = normalize(-vec3(n1.a – texture2D(uPot, deltaX).a, n1.a – texture2D(uPot, deltaY).a, n1.a – texture2D(uPot, deltaZ).a));

} else {

//this gives a more smoothed surface at the expense of less performance.

float op = 1.;

float scaler = 1.;

vec3 S1A_X = vec3(1., op, 1.);

vec3 S2A_X = vec3(op, scaler * op, op);

vec3 S3A_X = vec3(1., op, 1.);

vec3 S1B_X = vec3(0.);

vec3 S2B_X = vec3(0.);

vec3 S3B_X = vec3(0.);

vec3 S1C_X = vec3(-1., -op, -1.);

vec3 S2C_X = vec3(-op, -scaler * op, -op);

vec3 S3C_X = vec3(-1., -op, -1.);

vec3 S1A_Y = vec3(1., op, 1.);

vec3 S2A_Y = vec3(0., 0., 0.);

vec3 S3A_Y = vec3(-1., -op, -1.);

vec3 S1B_Y = vec3(op, scaler * op, op);

vec3 S2B_Y = vec3(0., 0., 0.);

vec3 S3B_Y = vec3(-op, -scaler * op, -op);

vec3 S1A_Z = vec3(-1., 0., 1.);

vec3 S2A_Z = vec3(-op, 0., op);

vec3 S3A_Z = vec3(-1., 0., 1.);

vec3 S1B_Z = vec3(-op, 0., op);

vec3 S2B_Z = vec3(-scaler * op, 0., scaler * op);

vec3 S3B_Z = vec3(-op, 0., op);

vec3 f1A = vec3(texture2D(uPot, index2D(C3D.y * (b0 + vec3(-p, p, p)))).a, texture2D(uPot, index2D(C3D.y * (b0 + vec3(0., p, p)))).a, texture2D(uPot, index2D(C3D.y * (b0 + vec3(p, p, p)))).a);

vec3 f2A = vec3(texture2D(uPot, index2D(C3D.y * (b0 + vec3(-p, 0., p)))).a, texture2D(uPot, index2D(C3D.y * (b0 + vec3(0., 0., p)))).a, texture2D(uPot, index2D(C3D.y * (b0 + vec3(p, 0., p)))).a);

vec3 f3A = vec3(texture2D(uPot, index2D(C3D.y * (b0 + vec3(-p, -p, p)))).a, texture2D(uPot, index2D(C3D.y * (b0 + vec3(0., -p, p)))).a, texture2D(uPot, index2D(C3D.y * (b0 + vec3(p, -p, p)))).a);

vec3 f1B = vec3(texture2D(uPot, index2D(C3D.y * (b0 + vec3(-p, p, 0.)))).a, texture2D(uPot, index2D(C3D.y * (b0 + vec3(0., p, 0.)))).a, texture2D(uPot, index2D(C3D.y * (b0 + vec3(p, p, 0.)))).a);

vec3 f2B = vec3(texture2D(uPot, index2D(C3D.y * (b0 + vec3(-p, 0., 0.)))).a, texture2D(uPot, index2D(C3D.y * (b0 + vec3(0., 0., 0.)))).a, texture2D(uPot, index2D(C3D.y * (b0 + vec3(p, 0., 0.)))).a);

vec3 f3B = vec3(texture2D(uPot, index2D(C3D.y * (b0 + vec3(-p, -p, 0.)))).a, texture2D(uPot, index2D(C3D.y * (b0 + vec3(0., -p, 0.)))).a, texture2D(uPot, index2D(C3D.y * (b0 + vec3(p, -p, 0.)))).a);

vec3 f1C = vec3(texture2D(uPot, index2D(C3D.y * (b0 + vec3(-p, p, -p)))).a, texture2D(uPot, index2D(C3D.y * (b0 + vec3(0., p, -p)))).a, texture2D(uPot, index2D(C3D.y * (b0 + vec3(p, p, -p)))).a);

vec3 f2C = vec3(texture2D(uPot, index2D(C3D.y * (b0 + vec3(-p, 0., -p)))).a, texture2D(uPot, index2D(C3D.y * (b0 + vec3(0., 0., -p)))).a, texture2D(uPot, index2D(C3D.y * (b0 + vec3(p, 0., -p)))).a);

vec3 f3C = vec3(texture2D(uPot, index2D(C3D.y * (b0 + vec3(-p, -p, -p)))).a, texture2D(uPot, index2D(C3D.y * (b0 + vec3(0., -p, -p)))).a, texture2D(uPot, index2D(C3D.y * (b0 + vec3(p, -p, -p)))).a);

float xGradient = dot(f1A, S1A_X) + dot(f2A, S2A_X) + dot(f3A, S3A_X) + dot(f1B, S1B_X) + dot(f2B, S2B_X) + dot(f3B, S3B_X) + dot(f1C, S1C_X) + dot(f2C, S2C_X) + dot(f3C, S3C_X);

float yGradient = dot(f1A, S1A_Y) + dot(f2A, S2A_Y) + dot(f3A, S3A_Y) + dot(f1B, S1B_Y) + dot(f2B, S2B_Y) + dot(f3B, S3B_Y) + dot(f1C, S1A_Y) + dot(f2C, S2A_Y) + dot(f3C, S3A_Y);

float zGradient = dot(f1A, S1A_Z) + dot(f2A, S2A_Z) + dot(f3A, S3A_Z) + dot(f1B, S1B_Z) + dot(f2B, S2B_Z) + dot(f3B, S3B_Z) + dot(f1C, S1A_Z) + dot(f2C, S2A_Z) + dot(f3C, S3A_Z);

normalA = vec3(zGradient, yGradient, xGradient);

f1A = vec3(texture2D(uPot, index2D(C3D.y * (b1 + vec3(-p, p, p)))).a, texture2D(uPot, index2D(C3D.y * (b1 + vec3(0., p, p)))).a, texture2D(uPot, index2D(C3D.y * (b1 + vec3(p, p, p)))).a);

f2A = vec3(texture2D(uPot, index2D(C3D.y * (b1 + vec3(-p, 0., p)))).a, texture2D(uPot, index2D(C3D.y * (b1 + vec3(0., 0., p)))).a, texture2D(uPot, index2D(C3D.y * (b1 + vec3(p, 0., p)))).a);

f3A = vec3(texture2D(uPot, index2D(C3D.y * (b1 + vec3(-p, -p, p)))).a, texture2D(uPot, index2D(C3D.y * (b1 + vec3(0., -p, p)))).a, texture2D(uPot, index2D(C3D.y * (b1 + vec3(p, -p, p)))).a);

f1B = vec3(texture2D(uPot, index2D(C3D.y * (b1 + vec3(-p, p, 0.)))).a, texture2D(uPot, index2D(C3D.y * (b1 + vec3(0., p, 0.)))).a, texture2D(uPot, index2D(C3D.y * (b1 + vec3(p, p, 0.)))).a);

f2B = vec3(texture2D(uPot, index2D(C3D.y * (b1 + vec3(-p, 0., 0.)))).a, texture2D(uPot, index2D(C3D.y * (b1 + vec3(0., 0., 0.)))).a, texture2D(uPot, index2D(C3D.y * (b1 + vec3(p, 0., 0.)))).a);

f3B = vec3(texture2D(uPot, index2D(C3D.y * (b1 + vec3(-p, -p, 0.)))).a, texture2D(uPot, index2D(C3D.y * (b1 + vec3(0., -p, 0.)))).a, texture2D(uPot, index2D(C3D.y * (b1 + vec3(p, -p, 0.)))).a);

f1C = vec3(texture2D(uPot, index2D(C3D.y * (b1 + vec3(-p, p, -p)))).a, texture2D(uPot, index2D(C3D.y * (b1 + vec3(0., p, -p)))).a, texture2D(uPot, index2D(C3D.y * (b1 + vec3(p, p, -p)))).a);

f2C = vec3(texture2D(uPot, index2D(C3D.y * (b1 + vec3(-p, 0., -p)))).a, texture2D(uPot, index2D(C3D.y * (b1 + vec3(0., 0., -p)))).a, texture2D(uPot, index2D(C3D.y * (b1 + vec3(p, 0., -p)))).a);

f3C = vec3(texture2D(uPot, index2D(C3D.y * (b1 + vec3(-p, -p, -p)))).a, texture2D(uPot, index2D(C3D.y * (b1 + vec3(0., -p, -p)))).a, texture2D(uPot, index2D(C3D.y * (b1 + vec3(p, -p, -p)))).a);

xGradient = dot(f1A, S1A_X) + dot(f2A, S2A_X) + dot(f3A, S3A_X) + dot(f1B, S1B_X) + dot(f2B, S2B_X) + dot(f3B, S3B_X) + dot(f1C, S1C_X) + dot(f2C, S2C_X) + dot(f3C, S3C_X);

yGradient = dot(f1A, S1A_Y) + dot(f2A, S2A_Y) + dot(f3A, S3A_Y) + dot(f1B, S1B_Y) + dot(f2B, S2B_Y) + dot(f3B, S3B_Y) + dot(f1C, S1A_Y) + dot(f2C, S2A_Y) + dot(f3C, S3A_Y);

zGradient = dot(f1A, S1A_Z) + dot(f2A, S2A_Z) + dot(f3A, S3A_Z) + dot(f1B, S1B_Z) + dot(f2B, S2B_Z) + dot(f3B, S3B_Z) + dot(f1C, S1A_Z) + dot(f2C, S2A_Z) + dot(f3C, S3A_Z);

normalB = vec3(zGradient, yGradient, xGradient);

}

//Save the vertex position, uses a linear interpolation between the two corners points of the edge and the iso value required.

gl_FragData[0] = vec4(mult.x * b0 + mult.y * b1 + mult.z * b0 + (1. – dot(mult, mult)) * mix(b0, b1, (diff1.x) / (diff1.y)), mcData.b);

//Save the vertex normal, calculate as the ponderated median of the two normals from the corners.

gl_FragData[1] = vec4(normalize(- (n0.a * normalA + n1.a * normalB) / max(n0.a + n1.a, EPSILON)), mcIndex);

}

}

This shader is divided into two steps, the vertices compaction/expansion process (highlighted in blue) and the vertex position and normal generation for each one of the vertices reallocated (highlighted in green). This fragment shader is also run over a quad that represents the 2D representation of the 3D voxel space. The uniforms for the programs are explained below.

– uPyramid: input texture that contains all the different levels of the pyramid generation, is created while doing the reduction process of the histopyramids.

– uBase: input texture containing the active cells result from the previous step.

– uPot: input texture containing the potential values of the corners from the voxels of the 3D world, calculated in the corners step.

– uTrianglesIndexes: input texture containing the edges where the vertices will be allocated, it’s based on the triTable from the Marching Cubes implementation of Paul Bourke.

– uTotal: input texture of 1×1 containing the total amount of vertices to generate, calculated during the reduction process, this data could also be read using the uPyramid texture.

– uFastNormals: input boolean value used to define if the normals are calculated using forward differences or a Sobel kernel.

– uRange: iso level used to define the surface required from the potential.

The shader starts evaluating if the one dimensional index key of the fragment to evaluate is lower than the total amount of vertices required, if it’s higher the vertex is discarded. This is done to avoid useless calculations over the quad. If the fragment’s key is below the shader keeps the execution.

The compaction process reallocate the vertices needed for each active voxels in the fragments that pass the first condition, it uses the masks explained in here using the optimizations for the reduction process and the compaction step.

The result for this first step is the corresponding 2D position of the voxel allocated in the active voxels texture, this 2D position will be repeated as many times as vertices are needed for the corresponding voxel in the subsequent fragments. Once this position is obtained the shader continues to the second part, the positions and normals generation.

The second part of the shader starts calculating the 3D position of the voxel based on the 2D position from the input texture, and it reads the marching cubes combination obtained in the active voxels step, finally it calculates the key offset (vertex number) of the fragment relative to the corresponding voxel.

With those values calculated the next step is to obtain the corresponding edge where the vertex will be allocated, this is done calculating an index used to read the data from the triTable texture containing the edges. The index is saved in the mcIndex variable, and the corresponding edge saved inside the variable called mcData.

Paul Bourke’s original implementation uses a set of if conditionals to check which edge to use depending on the vertex to calculate inside the voxel. In the current implementation the shader uses masks to discard the edges that are not required inside sums of all the possible corners and the position of the voxel. These sums returns the positions of the edge’s corners saved in the variables b0 and b1.

The corresponding potential values for these corners are read in the corners texture using b0 and b1 as input indexes, the results are saved in the n1 and n1 variables, then two more variables “diff1” and “mult” are saved to perform the linear interpolation that handles the position of the vertex over the edge.

For the gradient’s calculation (highlighted in yellow) the shader offers two choices, gradients calculated using forward differences or using a 3D Sobel operator, this last option gives more smooth gradients over the surface but penalize the performance of the shader. More information about the difference between these two options can be read in http://www.aravind.ca/cs788h_Final_Project/gradient_estimators.htm

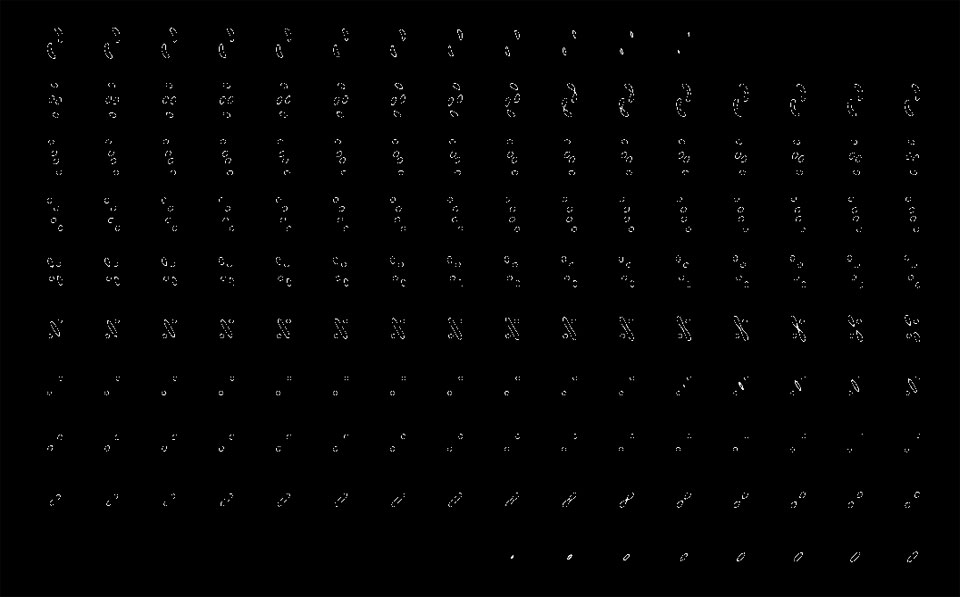

With the gradients calculated and the potentials of the corners obtained, the vertex normal and position is evaluated as a linear interpolation of the corner’s positions using the corresponding potentials datas and the iso surface value to make the interpolation. The shader outputs two textures with the compacted data for positions and normals shown below.

Vertex positions generated from the last step.

Vertex normals generated from the last step, encoded in the [0-1] space for visualization.

Notice that the current implementation recalculates vertices for adjacent triangles, meaning that much of the information from the last two textures is repeated. In order to implement the third method commented in http://http.developer.nvidia.com/GPUGems3/gpugems3_ch01.html a second histopyramid would be required.

The idea is to compact the information containing only the edges 0, 3 and 8 from the active cells step (with the second histopyramid) and calculate the corresponding data (vertex positions and normals for 0, 3 and 8 edges) with the last shader explained. The indexes can be calculated associating the original texture obtained in the active cells step, and the one with the 0, 3 and 8 edges.

The output of this implementation would be the positions, normals and indexes textures.

Tests done with this approach show that the current hystopyramid implementation becomes the bottleneck of the application in terms of time execution, hence requiring a second one for the indexes brings more penalties than benefits for performance. With a faster stream compaction method the idea of generating indexes would improve the performance of the algorithm in general.

Divergence and Branching:

The reader will notice that the last shader uses two conditional branches, one to discard the fragments where their 1D positional keys are higher than the total amount of vertices to generate, and a second one for the selection of the gradient type.

The gradient branching don’t present any divergence overt the threads since the instructions will be the same ones for each one of them, that’s because all the threads use the same boolean (uniform) passed to the shader.

The discard branching will present divergence on few warps/wavefronts since the data is compacted, this means that mostly each warp/wavefront containing 32 threads will execute the same code for all the fragments that generates positions and normals. On the other hand all the warps/wavefronts containing fragments with higher indexes would be grouped and discarded at the same time.

Divergence will be observed in the warps/wavefronts where there’s a group of fragment discarded, and the rest keep the execution (position/normal calculation), the different paths will force the warp to deal with both control paths and show a 50% performance loss on those warps. These warps would be allocated in the close to horizontal limit that separates the data generated and the empty data in the texture

This is one of the main reasons why data shouldn’t be calculated in a scattered fashion over a texture, in that case every warp could potential show divergence and performance would drop for each one of them, compaction limits the amount of warps where divergence happen.

Divergence can also be presented in the color implementation for the active cells evaluation, this is because the different colors are evaluated using a different set of if conditionals based on the result of each fragment’s different data, but since this is only meant for debugging no further optimizations where done in this section of the shader.

If the user would like to have a non divergence implementation for the color definition, it’s recommended to use float converted boolean masks on a weighted sum of the colors expected, it would represent more instructions, but all the threads will execute the same instructions at the same time.

More information about GPU pipeline, warps, branching and divergence can be found in the following articles https://developer.nvidia.com/content/life-triangle-nvidias-logical-pipeline and http://cs.nyu.edu/courses/spring12/CSCI-GA.3033-012/lecture5.pdf

The last part of these series will explain the javascript implementation of the shaders explained, and will provide different examples with different models at 128^3 and 256^3 resolutions.